Watch Motion

First try with on-device inference

Project: Watch Motion

- Getting Started

- Data Collection

- More Training Data

- First try with on-device inference

- A journey through raw data

- Session recording

- More to come...

I'm still collecting data for the model, but I thought I should try some on-device inference to get a feel for how the model performs and whether it's fast enough. I used the training data I have collected so far to train a very naive model without much preprocessing. The model is trained on windows of 500 samples (5 seconds at 100 Hz), and I run inference every 100 samples (once per second), always on the latest 500 samples.
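To make the windowing scheme concrete, here is a minimal Python sketch of the logic. It is not the project's watch code, and the class name `SlidingWindow` and its parameters are hypothetical. The idea is to keep the latest 500 samples in a fixed-size buffer and hand a copy to the model every 100 new samples, once the buffer is full.

```python
from collections import deque
from typing import Any, List, Optional

# Hypothetical constants matching the numbers described in the text.
SAMPLE_RATE_HZ = 100
WINDOW_SIZE = 500  # 5 s of data at 100 Hz
HOP_SIZE = 100     # run inference once per second


class SlidingWindow:
    """Keeps the latest `window_size` samples and emits a window every `hop_size` new samples."""

    def __init__(self, window_size: int = WINDOW_SIZE, hop_size: int = HOP_SIZE) -> None:
        self.window_size = window_size
        self.hop_size = hop_size
        # deque with maxlen silently drops the oldest sample when full.
        self._buffer: deque = deque(maxlen=window_size)
        self._since_last = 0

    def push(self, sample: Any) -> Optional[List[Any]]:
        """Add one sample; return a copy of the window when inference should run, else None."""
        self._buffer.append(sample)
        self._since_last += 1
        # Only emit once a full window exists and hop_size new samples have arrived.
        if len(self._buffer) == self.window_size and self._since_last >= self.hop_size:
            self._since_last = 0
            return list(self._buffer)
        return None


if __name__ == "__main__":
    window = SlidingWindow()
    # Simulate 10 s of data; each int stands in for an (x, y, z) sensor reading.
    for i in range(1000):
        w = window.push(i)
        if w is not None:
            # This is where the model's prediction call would go.
            print(f"inference on samples {w[0]}..{w[-1]}")
```

With these numbers each window overlaps the previous one by 400 samples (80%), so every sample is seen by the model five times, and the first prediction can only arrive 5 seconds after recording starts.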

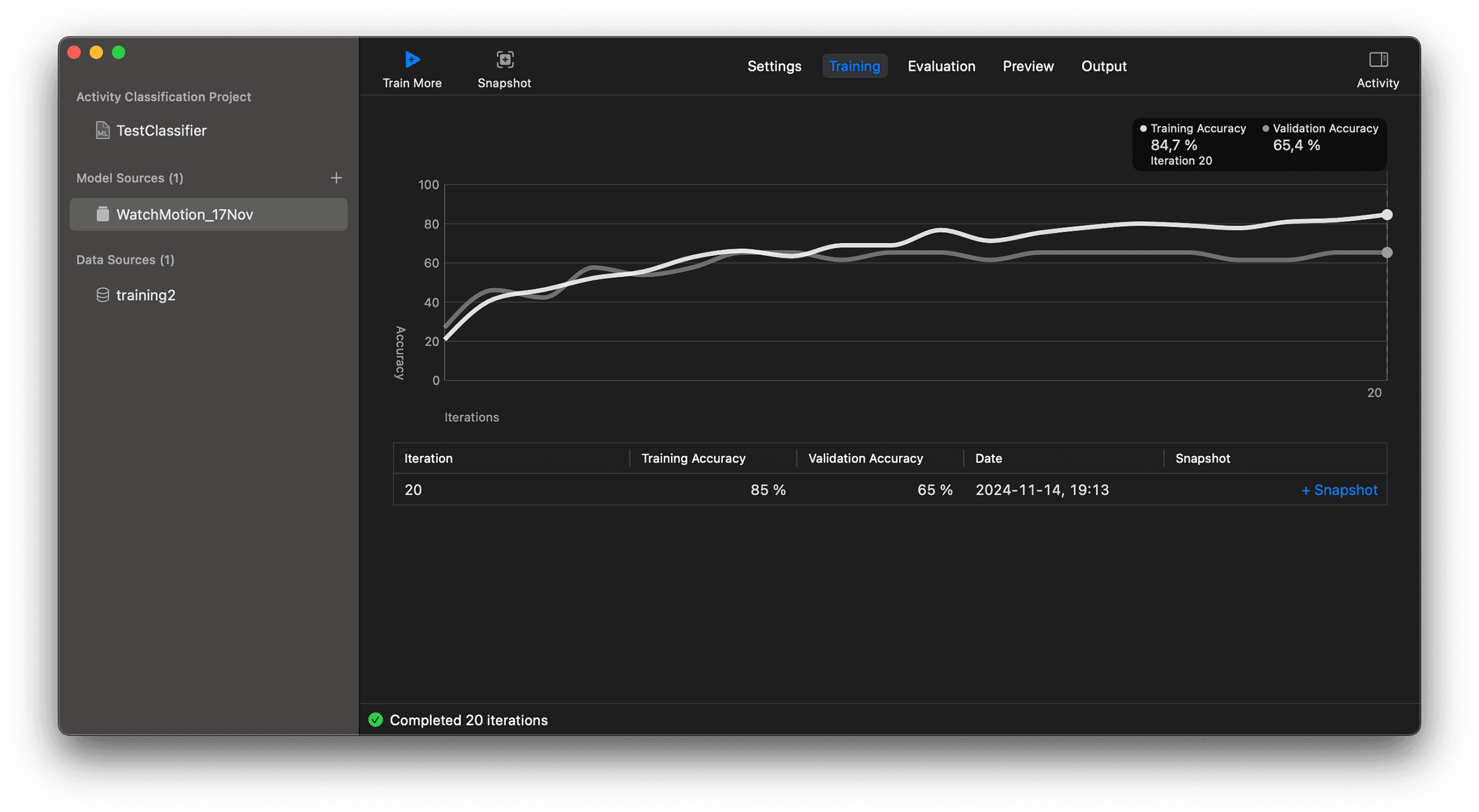

To get started, I downloaded the data from DynamoDB with a simple Python script and saved it to disk as JSON files. I then did some very simple preprocessing: just normalizing the data and splitting it into training and validation sets. I'm using CreateML to train the model. It's great for getting started but gives very little control over the training process, so I will probably switch to TensorFlow or PyTorch later. This is what it looks like after training for 20 epochs: